Digital Touch

What are Haptics?

Haptics refers to technologies that let users experience tactile sensations and interactions within digital environments, simulating touch to enhance immersion, skill development, or realism. In Virtual and Augmented Reality, haptics does not aim to replicate tactile sensations perfectly, since a fully realistic HD haptic display doesn’t yet exist. Instead, it works as a complementary layer within multisensory experiences, enhancing coherence and immersion by synchronizing tactile feedback with visuals and audio. This integration creates a more convincing and engaging virtual environment, allowing users to feel actions, interactions, and environmental elements in intuitive and meaningful ways, even if the sensations aren’t entirely lifelike. This blend of sensory inputs is essential for enriching VR and AR applications.

What It Can Do (The Technology Behind the Magic)

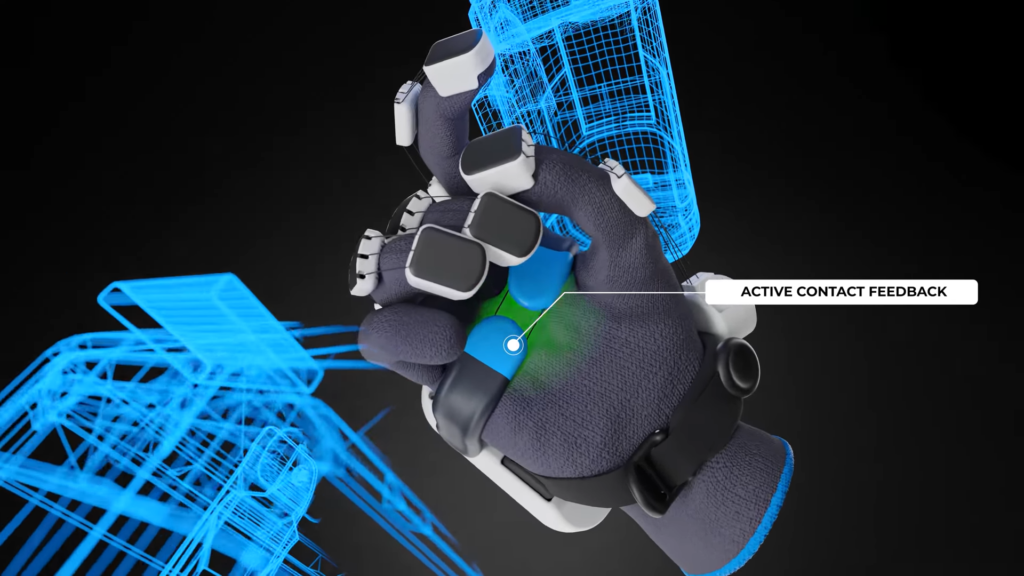

Haptic technologies encompass a range of modalities designed to simulate touch through kinesthetic and skin-based feedback, each offering a different level of fidelity and interaction richness. Kinesthetic feedback, which targets the perception of movement and force, can be divided into active force-feedback (force applied to a finger or arm that actively pushes back) and passive force-feedback (user-driven feedback in which resistive forces oppose the user’s own movement). This feedback is essential in applications requiring force simulation, such as virtual tool manipulation. Skin-based feedback, meanwhile, addresses sensations directly on the skin through modalities like vibrotactile feedback (using vibrations to convey texture or impact), contact feedback (providing localized pressure or skin-stretch to simulate touch or object boundaries), and temperature feedback (replicating thermal sensations to simulate heat or cold). The fidelity of these sensations depends on the resolution and complexity of the feedback, that is, how accurately and richly it can simulate the desired touch experience. Devices with more touch points, or higher haptic resolution, offer greater detail, enabling nuanced interactions like distinguishing textures or precise object shapes. Advanced haptic systems that integrate multiple modalities at high fidelity are key to creating immersive, believable experiences, particularly in virtual and augmented reality environments.

Advantages (and challenges we solve)

Haptics plays a crucial role in VR and AR, addressing one of the most frequent user complaints: the lack of a sense of touch. Without tactile feedback, interactions can feel incomplete, undermining the sense of presence and immersion. Visuals and audio can craft a captivating environment, but touch is what lets users feel and interact with virtual objects, enhancing the sense of embodiment and making the experience more natural and intuitive. This tactile engagement deepens immersion in XR environments and is especially valuable in VR training scenarios, where it provides realistic feedback that mimics real-world conditions. This multisensory integration improves learning retention, as users can practice skills with a physicality that fosters muscle memory and better understanding. By addressing this critical sensory gap, haptics makes VR and AR more immersive, effective, and engaging platforms for entertainment, education, and professional training.

Key Features

Developing haptics for XR (Extended Reality) requires a comprehensive set of features to ensure the tactile sensations are integrated seamlessly and enhance the overall multisensory experience. Here are the key features needed:

1. Scenario Development

- Crafting a detailed narrative or use-case scenario that outlines the purpose and context of haptic interactions.

- Defining how users will engage with the environment and objects, and what sensations they should experience.

2. Interaction Drafting

- Mapping out all potential interactions users can have with the virtual or augmented environment.

- Identifying the specific touchpoints, forces, and feedback mechanisms required for each interaction.

3. Interaction System

- Building a framework that governs user-object interactions, including tracking hand movements, gestures, and tool usage.

- Ensuring interactions are natural and intuitive while being synchronized with other sensory inputs.

4. Collision System

- Developing a robust system to detect when and how virtual objects collide with each other or with the user.

- Accurately modeling the physical properties of objects to provide appropriate haptic feedback, such as weight, texture, or impact.

5. Haptic Design Tool

- Providing designers with software to create, customize, and test haptic effects.

- Allowing for fine-tuning of feedback, including vibration patterns, force profiles, and thermal cues.

6. Haptic Rendering Tool

- Translating virtual interactions into real-time haptic feedback through rendering algorithms.

- Ensuring feedback is synchronized with visual and auditory cues for a cohesive experience.

7. Compatible Haptic Devices

- Using hardware capable of delivering a range of haptic sensations, such as:

  - Controllers: For handheld vibrations and button feedback.

  - Force-Feedback Gloves: To simulate object manipulation, resistance, and pressure.

  - Haptic Vests: For full-body sensations like impacts, environmental cues, or temperature changes.

- Ensuring all devices can interpret a unified signal format for consistent feedback across devices.

8. File Format and Signal Encoding

- Developing a standardized file format for encoding haptic signals, ensuring compatibility across multiple devices.

- Implementing compression and decompression methods to efficiently store and transmit haptic data without losing quality.

9. Multisensory Integration

- Designing haptics to function as part of a multisensory “orchestra” with visuals and audio.

- Synchronizing feedback to reinforce immersion and enhance the user’s sense of presence and embodiment.

These features, when combined, create a robust ecosystem for haptics in XR, enabling realistic, unified, and scalable tactile experiences.
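As a rough illustration of how a collision system and a haptic rendering step might fit together, the Python sketch below maps a contact event to a vibrotactile effect. The `Collision` and `VibrationEffect` types, the `render_collision` function, and every constant in it (the momentum ceiling, the frequency and duration ranges) are assumptions made for this example, not values taken from any real device or SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Collision:
    """A contact event as a hypothetical collision system might report it."""
    impact_speed: float  # relative speed at contact, in m/s
    mass: float          # mass of the virtual object, in kg
    stiffness: float     # 0.0 (soft) to 1.0 (rigid), an illustrative scale


@dataclass(frozen=True)
class VibrationEffect:
    """A simple vibrotactile effect description (hypothetical)."""
    amplitude: float     # normalized, 0.0 to 1.0
    frequency_hz: float
    duration_ms: int


def render_collision(c: Collision, max_momentum: float = 5.0) -> VibrationEffect:
    """Map a collision to a vibration effect using made-up tuning constants."""
    # Heavier and faster impacts feel stronger; clamp to the normalized range.
    momentum = c.mass * c.impact_speed
    amplitude = min(1.0, max(0.0, momentum / max_momentum))
    # Assumed mapping: rigid objects give a short, high-frequency click,
    # soft objects a longer, lower-frequency thud.
    frequency_hz = 80.0 + 170.0 * c.stiffness
    duration_ms = int(round(30 + 120 * (1.0 - c.stiffness)))
    return VibrationEffect(amplitude, frequency_hz, duration_ms)


# Example: a 1 kg rigid tool striking a surface at 2 m/s.
tap = render_collision(Collision(impact_speed=2.0, mass=1.0, stiffness=1.0))
```

In a real pipeline the resulting effect would be scheduled alongside the matching visual and audio events so that all three cues arrive together.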

The Technology Behind It (and the main components)

Haptics in XR is predominantly embedded into wearable devices like haptic gloves and vests, as well as grounded tools such as haptic pens and robotic arms, enabling users to experience a wide range of tactile sensations. Wearables provide localized feedback, with gloves simulating texture, pressure, and resistance for hand interactions, while vests deliver broader sensations like impacts or vibrations across the torso, enhancing immersion. Grounded devices, such as haptic pens, offer precision for tasks like drawing or surgical training, while robotic arms provide kinesthetic feedback by exerting forces on the user’s hand or arm. All these technologies rely on actuators, the components responsible for generating physical sensations. Different actuator types—such as vibrotactile motors, piezoelectric actuators, pneumatic systems, or electroactive polymers—offer varying levels of fidelity and richness, allowing for tailored sensations. For example, vibrotactile actuators excel in simulating texture, while pneumatic actuators can recreate more forceful or volumetric sensations, making haptics adaptable to a wide range of applications and interaction needs.

Impact (where & how)

Haptics, while still in its early stages, has a wide range of use cases that demonstrate its potential across industries. From enhancing immersion in gaming and entertainment to revolutionizing training in fields like healthcare, aviation, and industrial operations, haptics allows users to interact with virtual environments in ways that feel tangible and intuitive. However, one of the biggest challenges in advancing haptics lies in its complexity: it requires five distinct technologies (active force-feedback, passive force-feedback, vibrotactile, contact, and temperature feedback) to address the wide and varied sensory landscape of the human body, from fingertips to torso. Unlike audio and video, which benefit from universal formats like MP3 and MP4 that any device can interpret and play, haptics lacks a standardized signal format, making interoperability between devices a major hurdle. The Presence project is tackling this challenge by implementing, for the first time, a unified haptic coding format that enables three leading device manufacturers, covering haptic gloves, vests, and controllers, to interpret and play the same signals. This breakthrough represents a critical step toward standardization, allowing haptic experiences to be shared across devices and opening the door to greater scalability, consistency, and innovation in haptic applications.
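To make the idea of one signal playing on several device types more concrete, here is a minimal Python sketch. It is not the Presence project's actual coding format: the `HapticSignal` structure, the JSON-plus-zlib encoding, and the per-device gains in `DEVICE_GAIN` are all assumptions chosen only to show the encode-once, adapt-per-device pattern.

```python
import json
import zlib
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class HapticSignal:
    """Device-independent description of one vibration signal (hypothetical)."""
    sample_rate_hz: int
    samples: Tuple[int, ...]  # amplitude per sample, 0 to 255


def encode(signal: HapticSignal) -> bytes:
    """Serialize to JSON, then compress losslessly with zlib."""
    payload = json.dumps(
        {"sample_rate_hz": signal.sample_rate_hz, "samples": list(signal.samples)}
    )
    return zlib.compress(payload.encode("utf-8"))


def decode(blob: bytes) -> HapticSignal:
    """Reverse of encode(): decompress, parse, and rebuild the signal."""
    data = json.loads(zlib.decompress(blob).decode("utf-8"))
    return HapticSignal(data["sample_rate_hz"], tuple(data["samples"]))


# Illustrative output gains; real devices would need measured profiles.
DEVICE_GAIN: Dict[str, float] = {"glove": 1.0, "vest": 0.8, "controller": 0.5}


def adapt(signal: HapticSignal, device: str) -> List[float]:
    """Scale the shared signal into normalized drive levels for one device."""
    gain = DEVICE_GAIN[device]
    return [gain * s / 255 for s in signal.samples]


# One encoded signal, decoded once and adapted for each device class.
signal = HapticSignal(sample_rate_hz=1000, samples=(0, 128, 255, 128, 0))
blob = encode(signal)
restored = decode(blob)
drive_levels = {device: adapt(restored, device) for device in DEVICE_GAIN}
```

The design point is that the stored signal carries no device-specific detail; each device class applies its own adaptation step at playback time.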

Visuals and where to find them

Introducing the Nova 2 Haptic Glove

Skinetic – Feel The Difference