By Joan Llobera – ARTANIM

We rarely think about how we move; we just do it. However, creating a movement controller that matches our expectations is far more challenging than we might imagine, as any roboticist will tell you (see Physics-based character animation and human motor control).

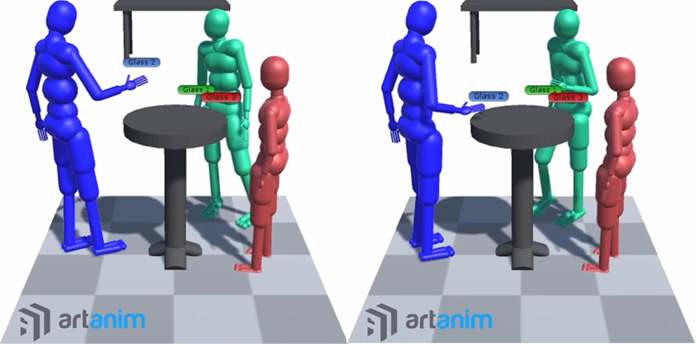

Figure 1: Two frames of a motion capture session in which three people engage in social interaction while drinking and talking. Physics-based movement controllers still cannot reproduce the interactive gestures and spatial behavior involved in such a casual scenario, and even less so once we consider the manipulation of objects such as glasses and a table.

Toward Lifelike Characters: Exploring Physical and Social Interaction in VR

At Artanim we are exploring the use of movement controllers similar to the ones used to control robots, but running in physics simulations instead of in the real world. Our idea is that running these simulations in Virtual Reality (VR) will allow us to create characters with richer real-time reactivity. We believe this may be a step toward improving the way autonomous virtual characters engage with their virtual environment, as well as a research path toward characters that show lifelike behavior and some degree of nuanced interaction with VR users.

Cognitive psychology and communication science have shown that people build rapport with one another, in good part, through subtle behavioral cues, interpersonal coordination, and loose but important rules about social space. Can we embed computational models that reproduce these mechanisms in physics-based controllers (see Playing the mirror game in virtual reality with an autonomous character)? What is the impact of implementing these computational models in VR experiences?

From Motion Control to Meaningful Gestures: Building Socially Intelligent Avatars

There are several technical challenges to address before we can explore questions related to interpersonal coordination and human-humanoid cooperation. The movement controllers need to be reliable, stable, robust, and easy to train, but also flexible enough to match the needs of a specific VR production scenario. Every day we engage with physical objects in rich and subtle ways: we grab a cup by its handle, intuitively balancing it; pour tea into it and drink from it, adapting dynamically to the movement of the liquid inside; use the cup to push some object on the table; and then leave it there for a while. However, manipulating objects in tasks that involve rich contacts is still a matter of fundamental research in robotics and control theory.

In addition, simply drinking tea with someone else around is enough to make us want to share the moment with them. We change the dynamics of our gestures to show that we take their interests and well-being into account. Can we create realistic social interactions among avatars and VR characters? This general question, focused specifically on body-centered interaction and interactive gestures, is what we are trying to answer in the EU project PRESENCE. Need more info? Contact Joan Llobera.