by Ke Li – Universität Hamburg

Introduction

A significant challenge in virtual reality (VR) is creating intelligent virtual agents (IVAs) that move and interact in a physically believable way. At the core of this issue lies a fundamental disconnect between an IVA’s cognition and its motor control: an agent’s high-level decision-making (what to do) is typically separate from its physical execution (how to move). This separation forces a reliance on a finite library of pre-authored animations. As a result, most existing embodied IVAs generate their behaviors from traditional animation databases, which often makes them appear rigid and robotic.

The Challenge of Large Language Models

Recent advances in artificial intelligence (AI) have demonstrated the remarkable ability of Large Language Models (LLMs) to simulate human-like conversation. However, these systems face a fundamental limitation: they are disembodied. Their intelligence rests mainly on statistical patterns in large text datasets, which separates them from the rich, multi-sensory input, such as vision and touch, that grounds human language in physical experience. As a result, LLMs often struggle with spatial or body-centered reasoning, and conversational IVAs powered by LLMs alone cannot intuitively react to spatial or non-verbal cues, such as a user looking away or pointing in a direction. Yet these abilities are essential for effective human-AI collaboration.

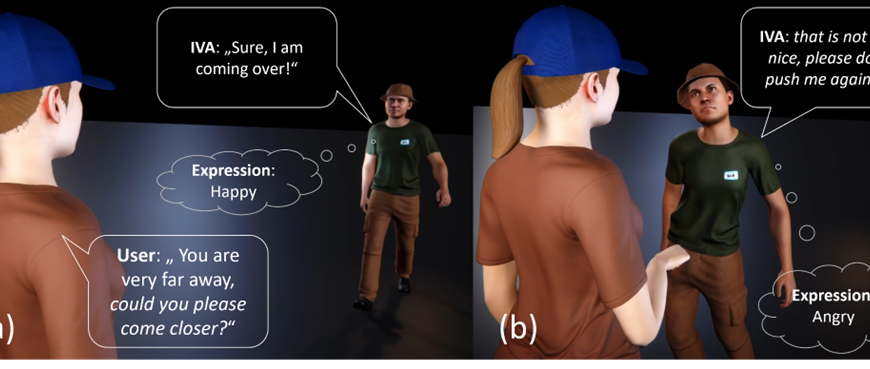

At IEEE ISMAR 2025, Universität Hamburg (UHAM) and ARTANIM presented a joint demo of an Intelligent Virtual Agent (IVA) in VR driven by a physics-based controller. This controller enables dynamic, unscripted behavior, such as autonomously maintaining social distance. Moreover, our system links physical interactions, such as a user pushing the agent, to an LLM, which generates context-aware verbal responses to the physical event. This bridges the gap between low-level motor control and high-level decision-making. During the demo session, attendees could directly test the robustness of these integrated social and physical behaviors. To the best of our knowledge, this is the first demo to couple emergent, physics-based reactions with LLM-driven intelligence running on a standalone immersive head-mounted display (HMD).
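To make the idea of turning a physical event into something an LLM can respond to more concrete, here is a minimal, hypothetical sketch. It is not the demo’s implementation. It assumes the physics simulation reports each contact with the touched body part and an impulse magnitude, and that the LLM accepts a chat-style list of messages with `role` and `content` fields. The names `ContactEvent`, `classify_contact`, `event_to_prompt` and `build_messages`, as well as the impulse thresholds, are illustrative inventions.

```python
from dataclasses import dataclass


@dataclass
class ContactEvent:
    """Hypothetical record of a contact detected by the physics simulation."""
    body_part: str        # e.g. "left_shoulder"
    impulse_ns: float     # contact impulse magnitude in newton-seconds
    source: str = "user"  # who caused the contact


# Hypothetical thresholds (N·s) for labelling contact strength; not from the demo.
LIGHT_TOUCH_MAX = 2.0
PUSH_MAX = 10.0


def classify_contact(impulse_ns: float) -> str:
    """Map a continuous impulse value to a coarse verbal category."""
    if impulse_ns < LIGHT_TOUCH_MAX:
        return "light touch"
    if impulse_ns < PUSH_MAX:
        return "push"
    return "strong shove"


def event_to_prompt(event: ContactEvent) -> str:
    """Describe a low-level contact event as a sentence the LLM can react to."""
    kind = classify_contact(event.impulse_ns)
    part = event.body_part.replace("_", " ")
    return (f"[Physical event] The {event.source} gave you a {kind} "
            f"on your {part}. Respond in character, briefly.")


def build_messages(history: list[dict], event: ContactEvent) -> list[dict]:
    """Return a new chat history with the event appended as a user message."""
    return history + [{"role": "user", "content": event_to_prompt(event)}]


if __name__ == "__main__":
    history = [{"role": "system", "content": "You are a friendly virtual agent."}]
    messages = build_messages(history, ContactEvent("left_shoulder", 4.5))
    print(messages[-1]["content"])
    # [Physical event] The user gave you a push on your left shoulder. Respond in character, briefly.
```

Discretizing the impulse into a few labels keeps the prompt short and lets the language model reason about the event in words rather than raw physics values. A real system would likely also pass the agent’s current pose or the conversation state.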

ISMAR 2025 Demo: A Conversational Virtual Agent with Physics-based Interactive Behaviour

Link to demo: https://youtu.be/7PpGLah1TW8