by Erik Wolf – Universität Hamburg

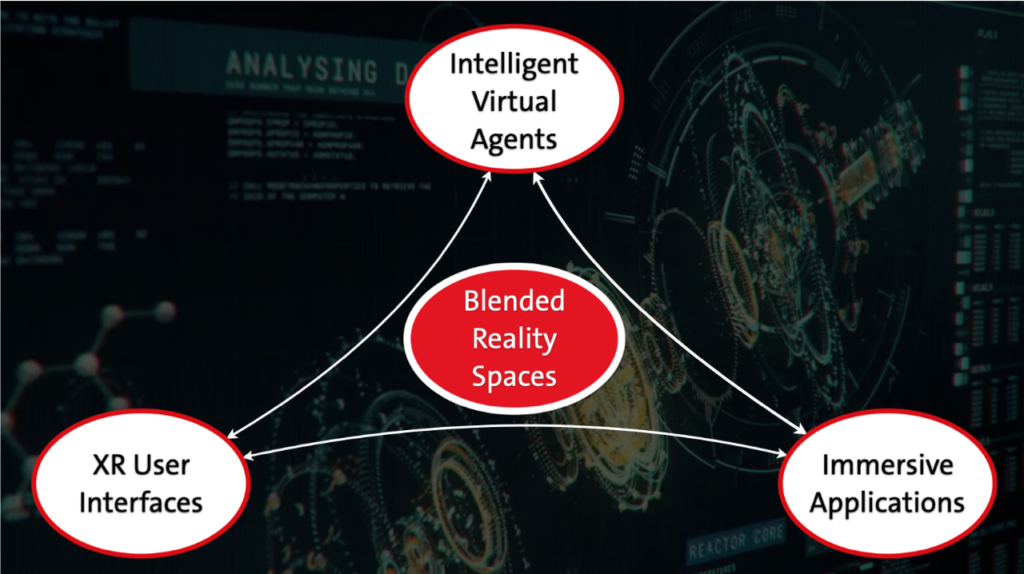

In the rapidly evolving landscape of eXtended Reality (XR), our research project PRESENCE aims to push the boundaries of what’s possible. In this blog post, we introduce one technical highlight of the project: Intelligent Virtual Humans. As one of PRESENCE’s three technology pillars, they are set to transform how we collaborate, train, and interact within virtual environments. Let’s dive into what makes these virtual beings so unique and how they are brought to life.

What are Intelligent Virtual Humans?

Intelligent Virtual Humans are highly realistic digital representations designed to mimic human behavior and appearance. They can serve as smart avatars, realizing embodied self-representations of users in virtual environments, or as intelligent virtual agents, embodied representations of advanced human-like computer algorithms. Within PRESENCE, Intelligent Virtual Humans are supported by advanced AI and neural network technologies, allowing them to interact naturally and effectively with users. The magic lies in their ability to not just “look” human, but to “feel” human by responding appropriately to certain verbal and non-verbal cues.

How are such Virtual Humans created?

The cornerstone of our Intelligent Virtual Humans will be an efficient, user-friendly pipeline for generating high-quality humanoid 3D models. Traditionally, creating these models requires complex setups like multi-view camera domes run by experts. Within PRESENCE, we will simplify this process significantly. Using just a simple mobile camera setup, we capture images and RGB-D videos of the user’s head while they show different facial expressions and head movements, such as speaking, smiling, or nodding. Our algorithms then process these videos and extract the best frames to create a personalized face model and a set of facial blendshapes. These blendshapes are the building blocks for our digital faces, allowing for a wide range of facial expressions and movements. Once the head model is ready, we normalize the input data (eliminating specularity, shadows, and image artifacts) and apply relighting methods. This ensures that our digital humans look consistent and authentic across various virtual environments and adapt seamlessly to different lighting conditions and other requirements.
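To make the idea of blendshapes more concrete, here is a minimal sketch of how a facial expression is commonly computed from them: each blendshape stores per-vertex offsets from the neutral face, and the final face is the neutral mesh plus the weighted sum of those offsets. This is a generic illustration, not the PRESENCE pipeline. The function name `blend_face`, the shape names `smile` and `jaw_open`, and the tiny two-vertex "mesh" are all hypothetical and chosen only for readability.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_face(
    neutral: List[Vertex],
    deltas: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Return neutral + sum(weight * delta) for every vertex.

    `deltas` maps a blendshape name to per-vertex offsets from the
    neutral face; `weights` maps a blendshape name to an activation.
    """
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        w = min(max(weight, 0.0), 1.0)  # clamp activation to [0, 1]
        for i, delta in enumerate(deltas[name]):
            for axis in range(3):
                result[i][axis] += w * delta[axis]
    return [(v[0], v[1], v[2]) for v in result]


# Hypothetical toy data: a "face" with only two vertices.
neutral_face = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_deltas = {
    "smile": [(0.0, 0.5, 0.0), (0.0, 0.5, 0.0)],
    "jaw_open": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],
}

# Half a smile combined with a fully opened jaw.
posed = blend_face(neutral_face, shape_deltas, {"smile": 0.5, "jaw_open": 1.0})
print(posed)
```

Because expressions are just weighted sums, an animation system only needs to stream a handful of weights per frame rather than a full mesh, which is one reason blendshapes are popular for real-time faces.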

For further information on the generation of Virtual Humans, please see the blog post of our project partner DIDIMO.

How are the Virtual Humans animated?

A major focus of PRESENCE is enhancing body-centered interaction and human-agent collaboration. Using the rigged humanoid 3D models generated, our Intelligent Virtual Humans will learn to perform specific tasks by observing examples of actors executing those actions. This applies recent developments in physics-based character animation to social interaction in XR, a territory that has been unexplored until now. The innovation is not just practical; it also contributes to scientific advances in AI-based XR, opening new avenues for research and application.

Moreover, AI-powered character animations respond to physical constraints for more plausible movements, enhanced further by deep reinforcement learning techniques. These developments enable our Intelligent Virtual Humans to interact dynamically, following social norms such as proxemics (the use of personal space in interaction).
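As a small illustration of what following proxemics can mean in practice, the sketch below classifies the distance between a virtual agent and a user into interpersonal zones. The thresholds are approximate values commonly cited from Edward T. Hall's proxemics work, not parameters from PRESENCE, and the function names (`proxemic_zone`, `ground_distance`, `should_step_back`) are hypothetical. A learned animation policy would handle this far more subtly; this only shows the underlying idea.

```python
import math
from typing import Tuple

# Approximate upper bounds in meters (assumed, commonly cited values).
ZONES = [(0.45, "intimate"), (1.2, "personal"), (3.6, "social")]


def proxemic_zone(distance_m: float) -> str:
    """Map an interpersonal distance in meters to a named zone."""
    for upper_bound, name in ZONES:
        if distance_m < upper_bound:
            return name
    return "public"


def ground_distance(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Distance between two positions on the floor plane."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def should_step_back(agent_pos: Tuple[float, float],
                     user_pos: Tuple[float, float]) -> bool:
    """A simple rule: the agent retreats if it is in the intimate zone."""
    return proxemic_zone(ground_distance(agent_pos, user_pos)) == "intimate"


print(proxemic_zone(1.0))
print(should_step_back((0.0, 0.0), (0.3, 0.0)))
```

In a real system, the preferred distance would likely also depend on context, such as the task, the relationship between the participants, and cultural background.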

For further information on the animation of Virtual Humans, stay tuned for the blog post of our project partner ARTANIM.

How can I interact with these Intelligent Virtual Humans?

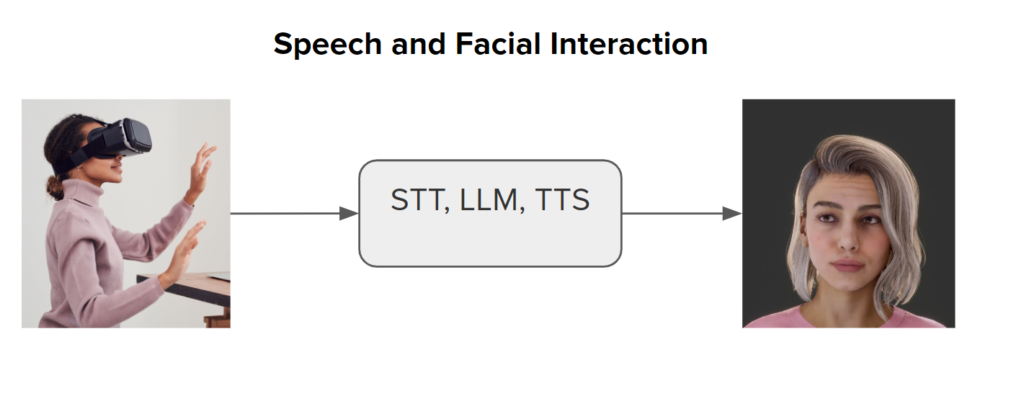

PRESENCE brings intelligence to Virtual Humans by integrating natural interaction methodologies. By leveraging advances in AI such as pose recognition (using systems like OpenPose or PoseNet) and natural language processing (using technologies like GPT-4 or Llama 3), our Intelligent Virtual Humans can process and respond to both verbal and non-verbal cues. This allows for interactions that closely mimic real-life human communication.

Our Intelligent Virtual Humans will be capable of a broad range of interactive behaviors. They can analyze and synthesize various vocal, visual, and verbal behaviors to match or synchronize with users in real time. Voice models can be trained on individual traits like pitch and accent to create authentic voice clones, while speech-driven animation models (like Omniverse Audio2Face) ensure natural facial expressions.

However, PRESENCE doesn’t stop at dyadic (one-on-one) human-agent interactions. Our research extends to group dynamics by analyzing typical gaze and dialogue behavior patterns in group discussions. These insights will then be incorporated into our Intelligent Virtual Humans, making them capable of participating in more complex group interactions.

While our Intelligent Virtual Humans offer a high degree of realism and interaction sophistication, it’s important to note that their intelligence is narrow and tailored to specific applications. Nonetheless, PRESENCE will provide means for easy customization to various use cases to bridge the gap between narrow and general AI.

For further information on the natural interaction with Virtual Humans, stay tuned for the blog post of our project partner JRS.

What comes next?

The future of XR holds immense potential for using Intelligent Virtual Humans. PRESENCE is at the forefront of this exciting journey, leveraging innovative technology to create digital interactions that feel natural, engaging, and transformative. Whether you’re new to the world of Virtual Humans or an expert, our efforts aim to make these digital entities a versatile and powerful tool in various domains.

Stay tuned for more updates as we continue to explore and expand the possibilities of Intelligent Virtual Humans within PRESENCE!

About the Human-Computer Interaction (HCI) group at the Universität Hamburg

The Human-Computer Interaction (HCI) group at the Universität Hamburg (UHH), headed by Prof. Dr. Frank Steinicke, is recognized for its focus and expertise on real-time interactive computer systems targeting the field of XR. Within the PRESENCE project, Frank receives support from Dr. Fariba Mostajeran and Erik Wolf, both experienced computer scientists and trained HCI experts focusing on XR technologies.

As a core partner of the PRESENCE project, the HCI group is responsible for the technical coordination of the entire project, ensuring the seamless integration and effective evaluation of the project’s three technical pillars. It further contributes to the user-centered design and evaluation process of the four technical demonstrators that aim to showcase the project’s technical capabilities in professional and personal applications. As its main task, the HCI group leads the development and integration of the Intelligent Virtual Humans introduced here. The team is responsible for implementing realistic speech and facial interaction with Intelligent Virtual Humans. It also integrates the solutions of the above-mentioned project partners, who work on realistically reconstructed humanoid 3D models, physics-based animations, and advanced action recognition, to ultimately realize advanced social multimodal interaction with realistic Intelligent Virtual Humans.