Over the past two decades, innovations in 3D sensing technologies have transformed how we capture and interact with 3D environments. Affordable and portable sensors, like Microsoft’s Kinect and Intel’s RealSense, have made 3D capture more accessible to consumers and researchers. 3D facial recognition—now commonly used to unlock smartphones—shows how these advancements have become integrated into everyday devices.

Meanwhile, LiDAR, once limited to specialized applications, is beginning to be used in mobile devices, expanding depth-sensing capabilities and enabling innovative solutions in augmented reality. At the high end of the spectrum, photogrammetry domes are widely used in film and video game production to create highly detailed 3D models and assets.

The PRESENCE project seeks to expand these capabilities by enabling real-time capture and transmission of immersive 3D environments, aiming to create a true sense of presence. Achieving this requires advanced volumetric capture technology that delivers high-quality, real-time 3D reconstructions.

Capturing a Sense of Presence

In immersive experiences such as Extended Reality (XR), the sense of presence depends heavily on how quickly, and in how much detail, a system can capture and reconstruct dynamic 3D scenes. Even with high-quality visuals, processing delays can diminish the user experience. This is why real-time volumetric capture is essential for seamless, interactive environments.

While 3D sensing technologies like Kinect and RealSense offer real-time capture, they lack the resolution required for professional, high-fidelity applications. Photogrammetry domes, on the other hand, provide exceptionally detailed 3D models but are too complex and static to be practical for real-time, on-location use. This creates a gap in the industry, particularly in scenarios that demand portability and real-time volumetric capture with high-quality outputs.

Light field cameras, supplied by Raytrix for the PRESENCE project, bridge this gap. These cameras capture both the spatial and angular information of light in a single shot, reducing the number of cameras needed for volumetric capture. Unlike photogrammetry, light field cameras offer manageable data flows for real-time reconstruction and can be deployed outside of stationary setups, all while providing higher fidelity than consumer-grade sensors like Kinect or RealSense.

The Operating Principle of Light Field Cameras

Although light field cameras are often known for their refocusing and MultiView capabilities, their primary advantage in the PRESENCE project lies in their ability to capture both 2D and 3D data in a single shot.

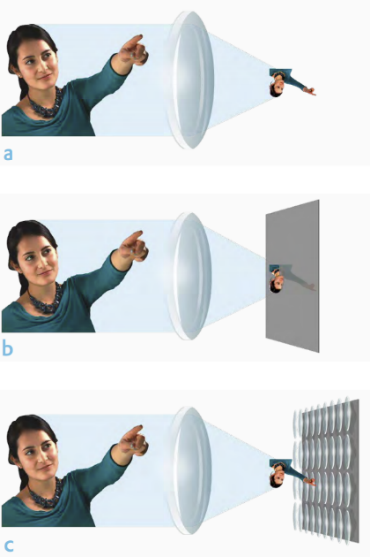

- The main lens of a camera forms a 3D image of the world: objects at different distances come into focus at different distances behind the lens.

- Conventional 2D cameras flatten the 3D world into a 2D image by recording only light intensity.

- In contrast, light field cameras place a microlens array between the main lens and the sensor to capture angular data, allowing the system to infer depth and spatial relationships from a single shot.
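The contrast between the two kinds of camera can be sketched in a few lines of NumPy. This is a minimal illustration of the idealized textbook Plenoptic 1.0 layout, not of the sensors used in PRESENCE: it assumes a square microlens grid perfectly aligned with the pixels and exactly `n` x `n` pixels behind each microlens, and the function names are hypothetical. Rearranging the raw image yields a 4D light field indexed by microlens position and ray direction; summing over the directions reproduces what a conventional 2D camera would record.

```python
import numpy as np


def raw_to_lightfield(raw: np.ndarray, n: int) -> np.ndarray:
    """Rearrange an idealized Plenoptic 1.0 raw image into a 4D light field.

    Hypothetical simplification: square microlens grid aligned to the pixel
    grid, n x n pixels per microlens (real sensors need calibration).
    Returns L[s, t, u, v]: (s, t) = which microlens (spatial position),
    (u, v) = which pixel under that microlens (ray direction).
    """
    h, w = raw.shape
    if h % n or w % n:
        raise ValueError("raw image size must be a multiple of n")
    # raw[s*n + u, t*n + v]  ->  L[s, t, u, v]
    return raw.reshape(h // n, n, w // n, n).transpose(0, 2, 1, 3)


def conventional_image(lf: np.ndarray) -> np.ndarray:
    """Simulate a 2D camera: integrate the light over all directions (u, v)."""
    return lf.sum(axis=(2, 3))


def sub_aperture_view(lf: np.ndarray, u: int, v: int) -> np.ndarray:
    """One viewpoint: the same ray direction taken from every microlens."""
    return lf[:, :, u, v]


# Toy 6x6 "sensor": 3x3 microlenses with 2x2 pixels behind each one.
raw = np.arange(36, dtype=float).reshape(6, 6)
lf = raw_to_lightfield(raw, n=2)
print(lf.shape)                           # (3, 3, 2, 2)
print(conventional_image(lf)[0, 0])       # 14.0 (sum of the top-left 2x2 block)
print(sub_aperture_view(lf, 0, 0).shape)  # (3, 3)
```

Note that in this layout the spatial grid is only as large as the number of microlenses: 36 pixels yield 3 x 3 spatial samples, each with 2 x 2 directions.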

Each microlens acts as a miniature camera, recording light from slightly different angles. This allows the camera to capture comprehensive information about the scene, including the direction of light rays. By embedding depth information directly into the image data, light field cameras facilitate real-time 3D reconstruction.
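If two neighboring microlenses are treated as a tiny rectified stereo pair, the classic stereo principle applies: a scene point shifts by a disparity `d` between the two views, and its depth follows from triangulation, Z = f * B / d. The sketch below illustrates only that general principle under a pinhole-stereo assumption. It is not the processing used in Raytrix's software, and the function names, focal length and baseline values are hypothetical.

```python
import numpy as np


def best_disparity(left: np.ndarray, right: np.ndarray, max_disp: int) -> int:
    """Brute-force 1D block matching between two rectified views.

    Assumes a scene point at column x in `left` appears at column x - d in
    `right`, and returns the integer d in [0, max_disp] that minimises the
    mean absolute difference over the columns both views share.
    """
    width = left.shape[-1]
    best_d, best_cost = 0, np.inf
    for d in range(max_disp + 1):
        overlap_left = left[..., d:]
        overlap_right = right[..., : width - d]
        # Mean (not sum) so that larger shifts with less overlap are not favoured.
        cost = np.mean(np.abs(overlap_left - overlap_right))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: float, focal_px: float, baseline: float) -> float:
    """Pinhole-stereo triangulation Z = f * B / d (Z in the units of baseline)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity: point effectively at infinity
    return focal_px * baseline / disparity_px


# Synthetic check: the "right" microlens view is the left one shifted by 3 px.
rng = np.random.default_rng(0)
left = rng.random(64)
right = np.roll(left, -3)
d = best_disparity(left, right, max_disp=8)
print(d)  # 3
print(depth_from_disparity(d, focal_px=100.0, baseline=0.5))  # about 16.67
```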

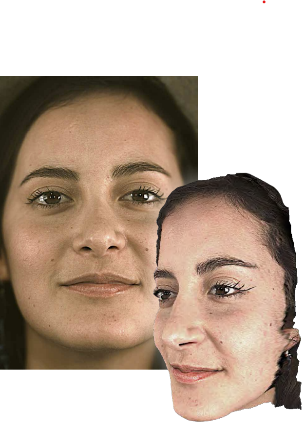

Representation of a microlens image (left), and a color image with 3D reconstruction (right)

In the PRESENCE project, up to four light field cameras are used to achieve volumetric capture. Since each camera independently perceives depth, far fewer cameras are needed compared to photogrammetry domes, which rely on dozens or even hundreds of cameras.
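Because each camera already delivers depth, a core step in combining them is expressing every camera's 3D points in one shared coordinate frame. The following is a minimal sketch, assuming each camera's extrinsic calibration is known as a 4x4 camera-to-world matrix with the optical axis along +z; the helper names and the ring arrangement are hypothetical, and a real pipeline would also have to deal with calibration error, overlapping surfaces and meshing.

```python
import numpy as np


def to_world(points_cam: np.ndarray, cam_to_world: np.ndarray) -> np.ndarray:
    """Apply a 4x4 rigid camera-to-world transform to an (N, 3) point array."""
    R = cam_to_world[:3, :3]
    t = cam_to_world[:3, 3]
    return points_cam @ R.T + t


def fuse_point_clouds(clouds, poses) -> np.ndarray:
    """Move every camera's points into the world frame and stack them."""
    if len(clouds) != len(poses):
        raise ValueError("need exactly one pose per point cloud")
    return np.vstack([to_world(p, T) for p, T in zip(clouds, poses)])


def ring_pose(yaw_deg: float, radius: float) -> np.ndarray:
    """Hypothetical helper: pose of a camera on a horizontal ring around the
    origin, rotated by yaw_deg about the y axis, looking at the origin."""
    a = np.radians(yaw_deg)
    c, s = np.cos(a), np.sin(a)
    T = np.eye(4)
    T[:3, :3] = [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]  # rotation about y
    T[:3, 3] = -T[:3, :3] @ np.array([0.0, 0.0, radius])      # camera centre
    return T


# Four hypothetical cameras on a 2 m ring, 90 degrees apart. Each one sees
# the same point 2 m straight ahead of it (i.e. the origin of the world).
poses = [ring_pose(yaw, radius=2.0) for yaw in (0, 90, 180, 270)]
clouds = [np.array([[0.0, 0.0, 2.0]]) for _ in poses]
fused = fuse_point_clouds(clouds, poses)
print(fused.shape)              # (4, 3)
print(np.allclose(fused, 0.0))  # True: all four views agree on the origin
```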

A High-Quality Light Field?

We’ve previously emphasized the need for high-quality 3D reconstruction to convey a true sense of presence. However, light field cameras are traditionally known to reduce spatial resolution due to the way they capture angular information. In the PRESENCE project, this challenge is addressed by using a light field variant known in the scientific literature as Plenoptic 2.0.

The more common Plenoptic 1.0 design focuses its microlenses at infinity, so each microlens contributes a single spatial sample and spatial resolution must be traded against angular resolution. Plenoptic 2.0 instead focuses the microlenses on the image plane of the main lens. Each microlens then projects a small, sharp image of part of the scene onto the sensor, which substantially raises the achievable spatial resolution while retaining angular information, providing the high-quality data needed for immersive, real-time 3D reconstructions.
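The difference between the two designs can be expressed with the thin-lens equation. As a simplified model (ideal thin lenses, standard sign convention), let $f$ be the microlens focal length, $b$ the distance from the microlens array to the sensor, and $a$ the distance from the microlens array to the plane that each microlens brings into focus, with $m$ the resulting magnification:

$$\frac{1}{f} = \frac{1}{a} + \frac{1}{b} \quad\Longrightarrow\quad a = \frac{f\,b}{b - f}, \qquad m = \frac{b}{a} = \frac{b - f}{f}$$

In Plenoptic 1.0 the sensor sits at the microlens focal plane ($b = f$), so $a \to \infty$: the microlenses are focused at infinity and each one records ray directions rather than a sharp image patch. Choosing $b \neq f$ gives a finite $a$, so each microlens acts as a small relay camera imaging the main lens's image plane with magnification $m$. When $|m| < 1$, the same scene point appears in several neighboring microlens images, which is what carries the depth information.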

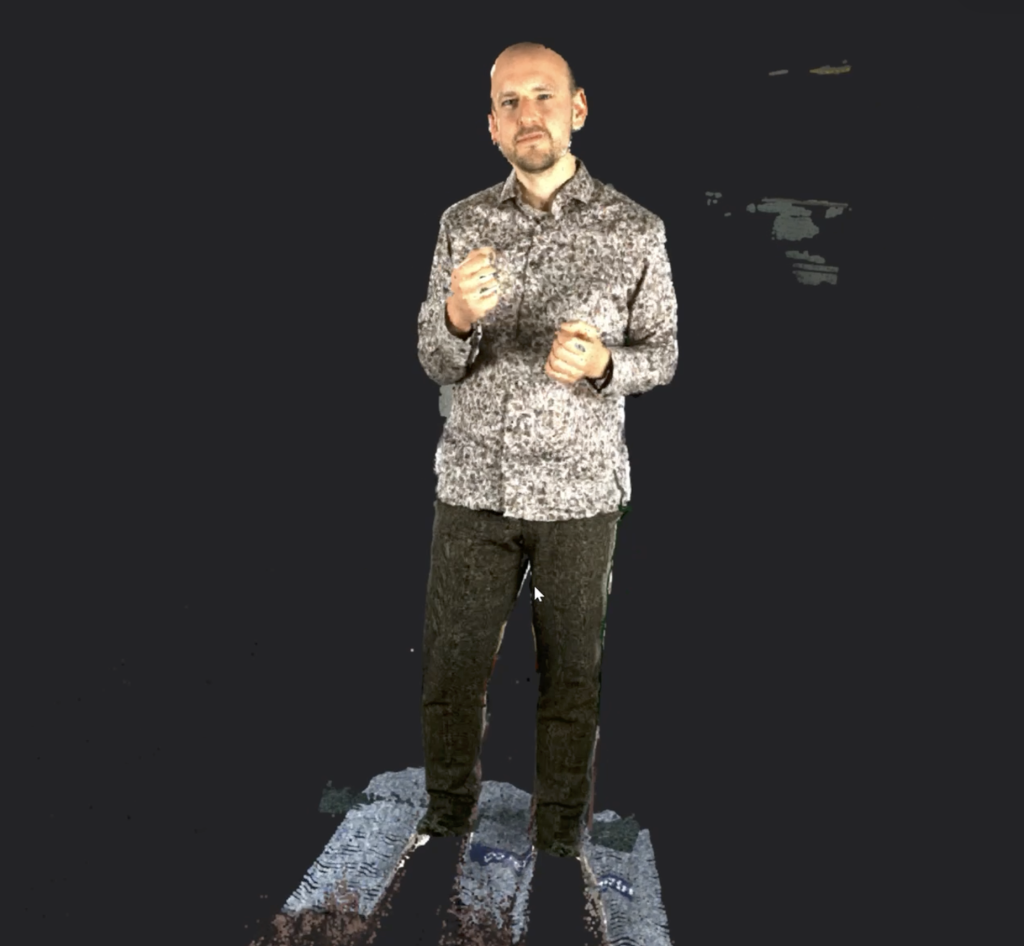

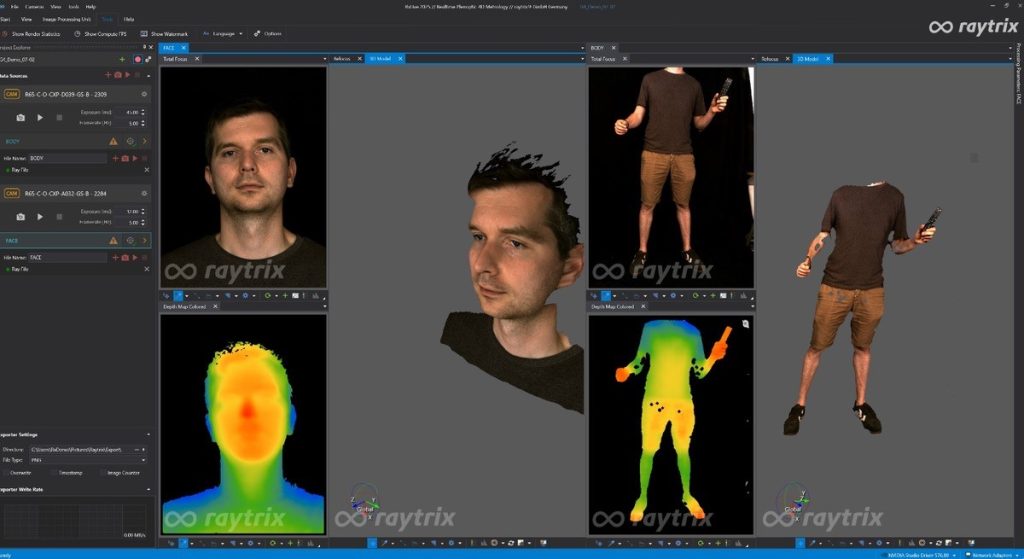

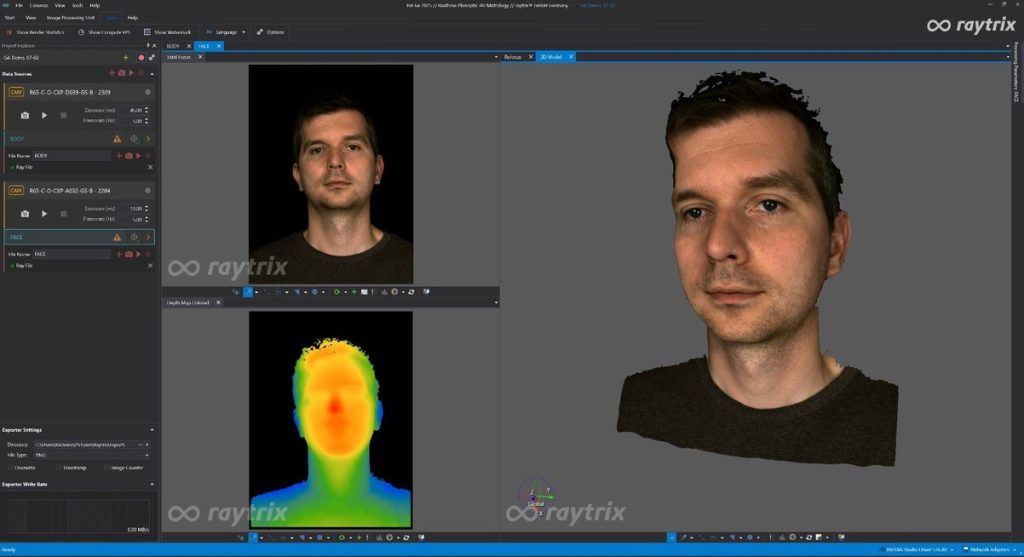

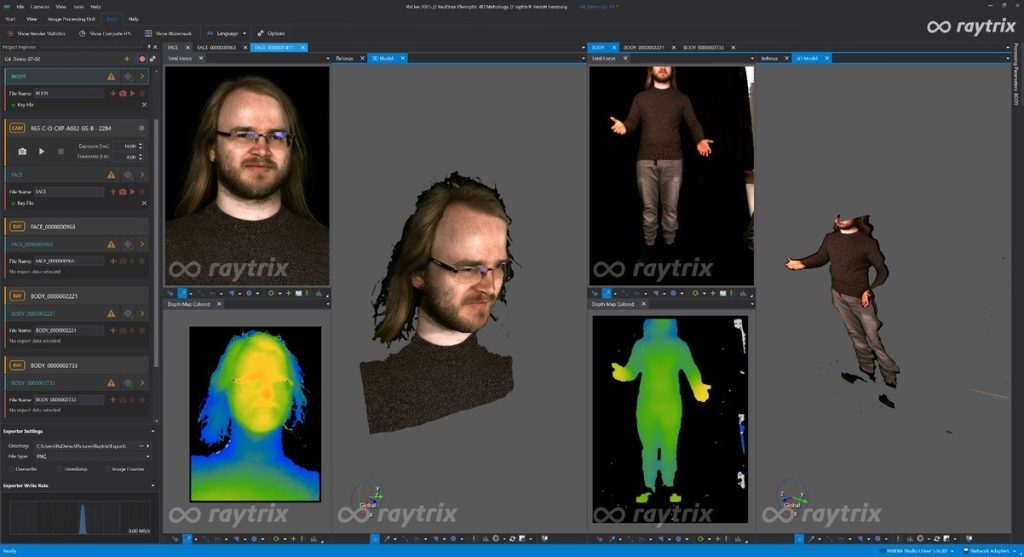

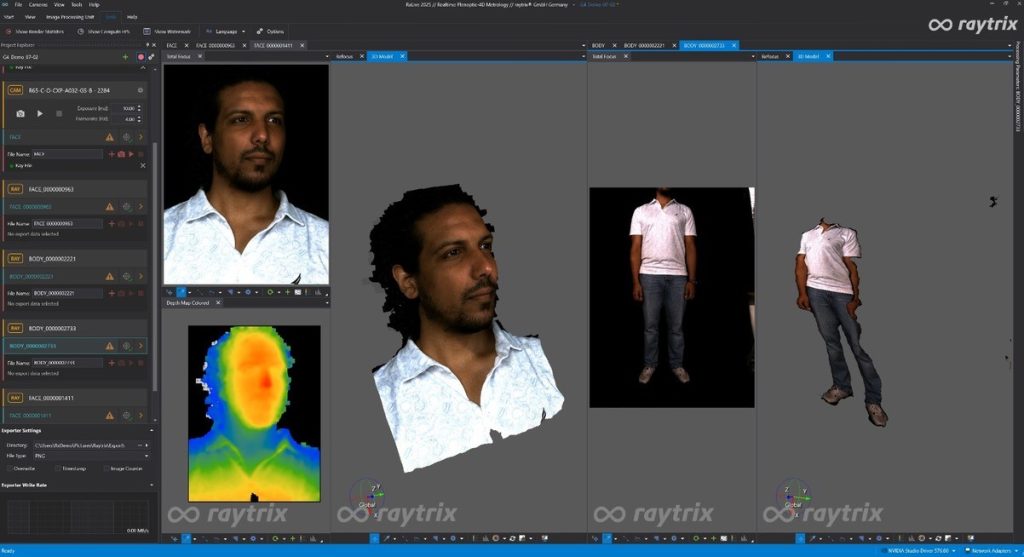

Screenshots from RxLive, Raytrix's light field capture and processing software.

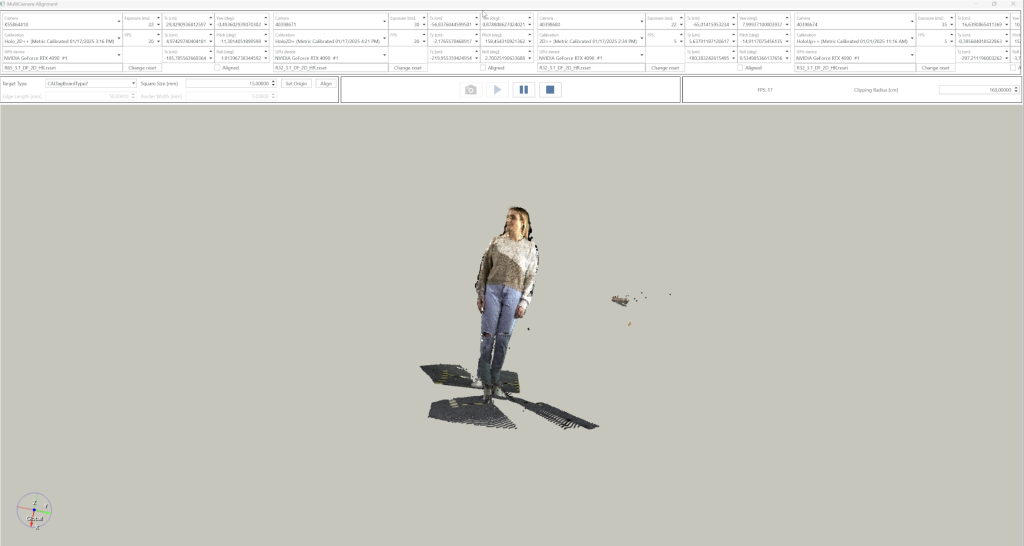

Screenshots of the individual light field streams after fusion.