by Theofilos Tsoris – CERTH

Introduction

In immersive XR applications like Holoportation, achieving high-quality 3D reconstruction depends heavily on precise multi-view calibration. But what happens when the camera setup is sparse, with minimal field-of-view (FOV) overlap? This is the case in the PRESENCE project, where cost and complexity constraints limit us to a few light-field cameras, yet we still aim for the best possible reconstruction. In such challenging scenarios, accurate spatial calibration becomes not just important, but absolutely critical.

The Challenge of Low-Overlap Multi-View Calibration

Traditional multi-view calibration techniques often assume that cameras share significant overlapping views of the scene. However, in our case, cameras are deliberately spaced apart to cover larger areas of the scene, resulting in limited visual overlap. This low-overlap configuration makes it extremely difficult for conventional calibration methods to find common reference points across all views, which in turn affects the consistency and quality of the final 3D reconstruction.
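To make "low overlap" concrete, here is a minimal sketch of one common way to quantify it for point clouds: the fraction of points in one view that have a neighbour in the other view within a small radius, once both are expressed in the same coordinate frame. The function name, the toy points, and the radius are illustrative assumptions, not values or code from the PRESENCE pipeline.

```python
import math

def overlap_ratio(source, target, radius):
    """Fraction of source points with at least one target point within `radius`.

    Both clouds are assumed to already be in the same coordinate frame.
    Brute force, O(len(source) * len(target)); fine for a toy example only.
    """
    if not source:
        return 0.0
    hits = sum(
        1 for p in source
        if any(math.dist(p, q) <= radius for q in target)
    )
    return hits / len(source)

# Toy example: two "views" of points along a line that share only one end.
view_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
view_b = [(2.0, 0.0, 0.01), (3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]

print(f"overlap of A with B: {overlap_ratio(view_a, view_b, radius=0.05):.2f}")
```

The lower this ratio, the fewer shared points a registration method has to work with, which is the regime this post is concerned with.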

Why Calibration Quality Matters for 3D Reconstruction in Holoportation

In 3D reconstruction, poorly calibrated camera setups can lead to visible misalignments, jitter, or ghosting in the reconstructed human model and in the surrounding scene. These artifacts can ruin the immersive experience and limit the technology’s effectiveness in applications like Holoportation, remote collaboration, and remote healthcare. That’s why evaluating and choosing the right calibration algorithm is essential for delivering seamless and realistic 3D presence.

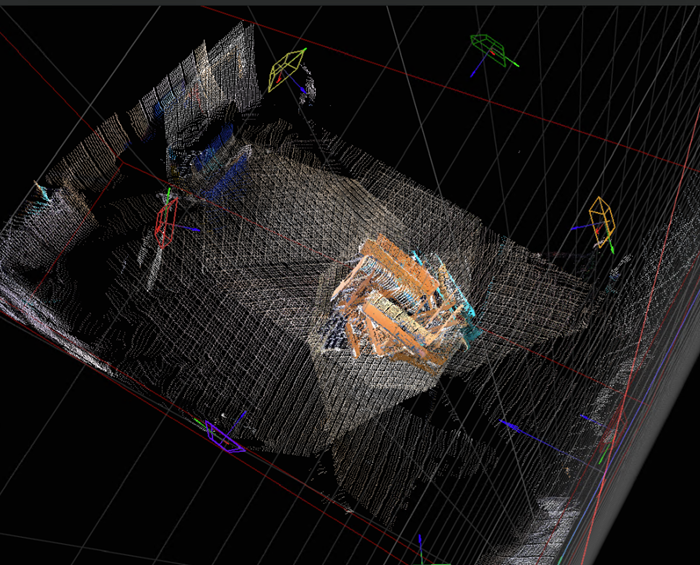

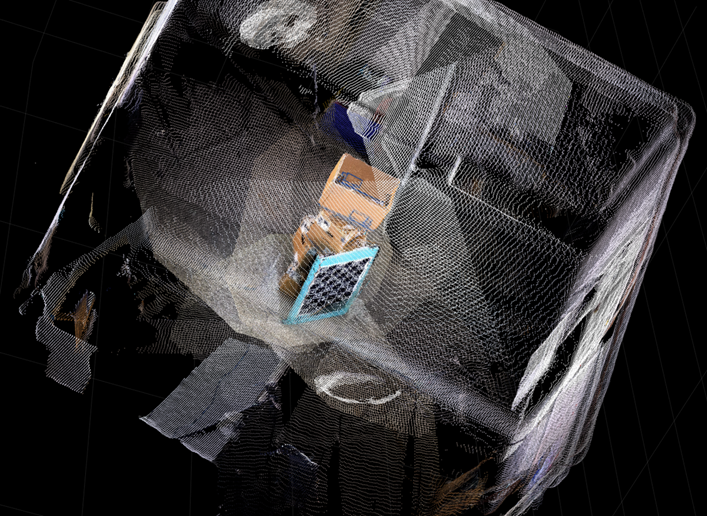

3D point cloud reconstruction of a scene with stacked boxes in the middle. Poor calibration severely degrades the reconstruction (top), while a reasonably accurate calibration yields a consistent result (bottom).
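A back-of-the-envelope sketch shows why even small calibration errors become visible. If a camera's estimated orientation is off by a small angle, a point at distance d from that camera is displaced along a chord of length 2·d·sin(θ/2). The function name, the 3 m distance, and the error values below are assumptions chosen for illustration, not measurements from our setup.

```python
import math

def misalignment_from_rotation_error(distance_m, error_deg):
    """Displacement (in metres) of a point at `distance_m` from the camera
    centre when the camera orientation is wrong by `error_deg` degrees.

    Assumes a pure rotation error about an axis perpendicular to the viewing
    ray; the point moves along a chord of a circle of radius `distance_m`.
    """
    return 2.0 * distance_m * math.sin(math.radians(error_deg) / 2.0)

# Illustrative values only: a subject standing 3 m from the camera.
for err_deg in (0.1, 0.5, 1.0, 2.0):
    shift_cm = 100.0 * misalignment_from_rotation_error(3.0, err_deg)
    print(f"{err_deg:>4} deg rotation error at 3 m -> {shift_cm:.1f} cm shift")
```

Under these assumptions, a one-degree rotation error already shifts points by roughly 5 cm at 3 m, which is enough to produce the kind of ghosting and doubled surfaces described above when views are merged.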

Comparing Algorithms: From Classic to Neural Approaches

To address these challenges, our team at CERTH’s Visual Computing Lab carried out a rigorous evaluation of both conventional and modern calibration algorithms. We benchmarked MC-Calib, a reliable multi-camera calibration technique, against recent neural approaches such as Lepard and RoITr, which perform point cloud registration using learned geometric features, and against a conventional ICP-based point cloud registration technique (GICP+). Each method has its strengths, but also weaknesses that become more apparent under low-overlap settings.

The Role of Synthetic Data in Calibration Research

One of the bottlenecks in multi-view calibration research is the lack of ground truth data. In real-world scenes, it’s nearly impossible to know the exact calibration parameters. This makes algorithm comparison subjective and error-prone. To overcome this, we developed SYNCH2-Cal, a synthetic dataset generation and evaluation framework. It creates realistic scenes with fiducial and pattern-based targets, as well as adjustable camera viewpoints with known ground truth calibration.
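Once ground truth is known, comparing algorithms reduces to measuring how far each estimated camera pose is from the true one. The sketch below uses two widely used metrics, the geodesic rotation error and the Euclidean translation error; it is an illustration of the idea, not the evaluation code of SYNCH2-Cal, and all names and numbers in it are hypothetical.

```python
import math

def rotation_z(deg):
    """3x3 rotation matrix (nested lists) for a rotation of `deg` about Z."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotation_error_deg(R_gt, R_est):
    """Angle of the relative rotation R_gt^T * R_est, in degrees."""
    # trace(R_gt^T R_est) equals the element-wise product sum of both matrices.
    trace = sum(R_gt[i][j] * R_est[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp for safety
    return math.degrees(math.acos(cos_angle))

def translation_error(t_gt, t_est):
    """Euclidean distance between true and estimated camera positions."""
    return math.dist(t_gt, t_est)

# Hypothetical ground-truth pose and an estimate from some algorithm.
R_gt, t_gt = rotation_z(30.0), (1.0, 0.0, 2.0)
R_est, t_est = rotation_z(32.0), (1.03, 0.0, 1.96)

print(f"rotation error:    {rotation_error_deg(R_gt, R_est):.3f} deg")
print(f"translation error: {translation_error(t_gt, t_est):.3f} m")
```

Because both numbers are computed against known ground truth, two algorithms run on the same synthetic scene can be ranked objectively rather than by visual inspection.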

Introducing SYNCH2-Cal: A Synthetic Dataset Workbench for Faster Progress

SYNCH2-Cal allows us to systematically evaluate calibration and registration algorithms under controlled conditions. It supports conventional calibration methods that use fiducial markers and pattern-based targets as well as point cloud-based approaches, so traditional, ICP-based, and neural methods can all be evaluated rigorously. For each virtual camera viewpoint, the framework generates RGB images and 3D point clouds of the virtual scene, together with precise ground truth for spatial calibration, offering a highly controlled testbed. It also exposes an extensive set of configurable parameters, including camera pose, origin, and target configuration, as well as random camera perturbations, 2D noise, and lens distortions. This makes SYNCH2-Cal an ideal platform for ablation studies, allowing researchers to pinpoint the strengths and weaknesses of each algorithm in terms of accuracy, robustness, and scalability, especially under low-overlap conditions that mimic real-world setups.
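To illustrate what controlled perturbations can look like in an ablation study, here is a minimal sketch that jitters a camera position and adds Gaussian noise to detected 2D corner locations. It is not the SYNCH2-Cal API: the `PerturbationConfig` class, its fields, the default values, and both helper functions are hypothetical names invented for this example.

```python
import random
from dataclasses import dataclass

@dataclass
class PerturbationConfig:
    """Hypothetical knobs for one ablation run (not SYNCH2-Cal's real options)."""
    max_position_jitter_m: float = 0.02  # uniform jitter per axis, in metres
    pixel_noise_std: float = 0.5         # std of Gaussian 2D noise, in pixels
    seed: int = 0                        # fixed seed keeps runs reproducible

def perturb_position(position, cfg, rng):
    """Shift each coordinate of a camera position by a bounded random offset."""
    j = cfg.max_position_jitter_m
    return tuple(p + rng.uniform(-j, j) for p in position)

def add_pixel_noise(corners, cfg, rng):
    """Add zero-mean Gaussian noise to a list of (u, v) image points."""
    s = cfg.pixel_noise_std
    return [(u + rng.gauss(0.0, s), v + rng.gauss(0.0, s)) for u, v in corners]

cfg = PerturbationConfig()
rng = random.Random(cfg.seed)

camera_position = (1.5, 0.0, 2.0)               # illustrative pose, metres
detected_corners = [(320.0, 240.0), (352.0, 240.0), (320.0, 272.0)]

print(perturb_position(camera_position, cfg, rng))
print(add_pixel_noise(detected_corners, cfg, rng))
```

Sweeping one parameter at a time while keeping the seed fixed is what makes it possible to attribute a change in calibration accuracy to a single source of error.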

Synthetic scene with fiducial Charuco markers produced by SYNCH2-Cal (RGB camera views)

Synthetic 3D scene with fiducial Charuco markers produced by SYNCH2-Cal (merged point clouds of 8 cameras).

Why It Matters

Our work shows that synthetic datasets aren’t just an academic exercise; they are a practical necessity for improving real-world XR systems. By using SYNCH2-Cal, we’ve been able to identify failure cases in current algorithms and plan new directions for more robust multi-view calibration in low-overlap scenarios. The ability to evaluate both conventional calibration techniques and modern point cloud registration algorithms on the same controlled dataset enables detailed comparisons and objective benchmarking. Moreover, by modelling complex camera configurations and introducing controlled perturbations such as pose noise and image distortions, SYNCH2-Cal lets us approximate real-world setups and understand how different algorithms respond to the challenges they pose. This insight is critical for guiding improvements in calibration strategies and developing solutions that are both scalable and robust in diverse XR environments.

Conclusions

As XR moves from the lab to the real world, the challenge of calibrating sparse, low-overlap camera systems will only grow more relevant. Through workbenches like SYNCH2-Cal and thorough evaluation of both classic and neural approaches, we’re not just solving today’s calibration problems; we’re laying the groundwork for next-generation Holoportation systems that are more accurate, adaptable, and immersive than ever before.

References

- “SYNCH2-Cal: A Synthetic Framework for Benchmarking Multi-View Calibration and Point Cloud Registration” has been submitted to the VCIP 2025 conference.

- Koukoulis, E., Arvanitis, G. and Moustakas, K., 2024. Unleashing the power of generalized iterative closest point for swift and effective point cloud registration. In 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates (pp. 3403-3409). doi: 10.1109/ICIP51287.2024.10647551.

- Rameau, F., Park, J., Bailo, O. and Kweon, I.S., 2022. MC-Calib: A generic and robust calibration toolbox for multi-camera systems. Computer Vision and Image Understanding, 217, p.103353.

- Li, Y. and Harada, T., 2022. Lepard: Learning partial point cloud matching in rigid and deformable scenes. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5554-5564).

- Yu, H., Qin, Z., Hou, J., Saleh, M., Li, D., Busam, B. and Ilic, S., 2023. Rotation-invariant transformer for point cloud matching. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5384-5393).